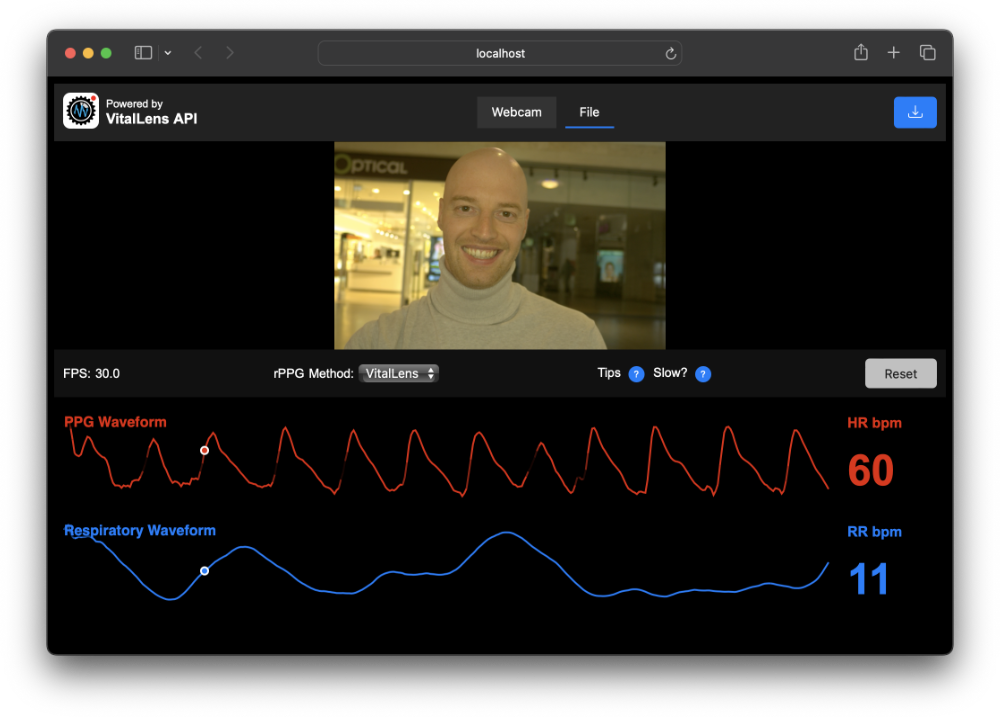

Remote video-based vital sign monitoring

Physiological processes such as blood flow and respiration leave behind subtle visual cues. These cues include changes in skin color and upper body movement, which can be picked up by ordinary cameras.

As a result, vital signs such as heart rate, heart rate variability (HRV), and respiratory rate can be monitored remotely using everyday devices like smartphones.

Frequently asked questions

Remote photoplethysmography (rPPG for short) is a camera-only way of reading pulse-related color changes in your face. Each heartbeat shifts the balance of red and green light reflected by skin; a neural network tracks those micro-changes frame-by-frame, cancels motion and lighting swings, and rebuilds the same photoplethysmogram waveform you'd get from a fingertip sensor. From that waveform it derives heart rate, HRV, breathing rate and other vitals in real time. Learn more in our rPPG explainer article.
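To make the last step concrete, here is a minimal sketch of how a heart rate could be read off a pulse waveform once it has been recovered. It is not the VitalLens neural network: it swaps in a plain spectral-peak search, and the function name `estimate_heart_rate_bpm`, the 42 to 180 bpm search band, and the synthetic trace are all assumptions made for illustration.

```python
import math

def estimate_heart_rate_bpm(signal, fps, lo_bpm=42, hi_bpm=180):
    """Return the bpm in [lo_bpm, hi_bpm] whose sinusoid best matches the signal."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]  # remove the constant skin-tone offset
    best_bpm, best_power = lo_bpm, -1.0
    for bpm in range(lo_bpm, hi_bpm + 1):
        freq_hz = bpm / 60.0
        # Correlate the trace with a cosine and a sine at this candidate rate
        re = sum(x * math.cos(2 * math.pi * freq_hz * i / fps)
                 for i, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * freq_hz * i / fps)
                 for i, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm

# Hypothetical input: 10 seconds of a per-frame color trace at 30 fps,
# carrying a tiny 72 bpm pulse on top of a constant brightness level.
fps = 30
trace = [0.5 + 0.01 * math.sin(2 * math.pi * (72 / 60) * i / fps)
         for i in range(10 * fps)]

print(estimate_heart_rate_bpm(trace, fps))  # 72
```

Real footage is far noisier than this synthetic trace, which is why motion and lighting cancellation matter before any rate is estimated.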

In its current public release, the VitalLens model was trained on 1413 subjects across all six Fitzpatrick skin types. On a large, diverse test benchmark including Vital Videos, it posts average errors of 1.57 bpm for heart rate, 1.08 bpm for respiratory rate, and 10.18 ms for HRV (SDNN). Learn more in our technical report paper.
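For readers unfamiliar with these metrics, the sketch below shows how SDNN and an average error could be computed. It assumes SDNN is taken as the sample standard deviation of beat-to-beat intervals and that "average error" means mean absolute error; the technical report is the authority on the exact definitions used. The function names and the numbers are made up for illustration and are not benchmark data.

```python
import statistics

def sdnn_ms(intervals_ms):
    """SDNN: standard deviation of beat-to-beat intervals, in milliseconds.

    Assumption: sample standard deviation (n - 1 in the denominator).
    """
    return statistics.stdev(intervals_ms)

def mean_absolute_error(estimates, references):
    """Average absolute gap between estimated and reference values."""
    return sum(abs(e - r) for e, r in zip(estimates, references)) / len(estimates)

# Illustrative values only
intervals = [800, 810, 790, 805, 795]   # ms between successive beats
camera_hr = [71, 65, 80]                # bpm estimated from video
reference_hr = [72, 64, 78]             # bpm from a contact sensor

print(round(sdnn_ms(intervals), 2))                              # 7.91
print(round(mean_absolute_error(camera_hr, reference_hr), 2))    # 1.33
```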

Yes. Training data includes a dedicated Africa set, so Fitzpatrick types 5-6 see only a small drop in SNR compared with lighter tones.

App: All processing and raw video stay on-device; nothing leaves your phone.

API: You only upload pre-processed (pixelated & audio-removed) frames; those and the resulting vitals live briefly in server RAM and are deleted immediately per our Terms of Service.
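The exact pre-processing applied before upload is not described here. As a rough illustration of what pixelation means, the sketch below averages each tile of a small grayscale frame so that fine facial detail is discarded while overall brightness is kept. The function name `pixelate`, the tile size, and the toy frame are assumptions, not the actual client behavior.

```python
def pixelate(frame, block):
    """Downsample a 2D grayscale frame by averaging each block x block tile."""
    height, width = len(frame), len(frame[0])
    out = []
    for top in range(0, height - block + 1, block):
        row = []
        for left in range(0, width - block + 1, block):
            tile = [frame[y][x]
                    for y in range(top, top + block)
                    for x in range(left, left + block)]
            row.append(sum(tile) / len(tile))
        out.append(row)
    return out

# Toy 4x4 frame reduced to 2x2
frame = [
    [10, 20, 30, 40],
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [50, 60, 70, 80],
]
print(pixelate(frame, 2))  # [[15.0, 35.0], [55.0, 75.0]]
```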



Our mission

Empowering global health through innovative, privacy-centric health monitoring solutions via user-friendly smartphone applications and robust APIs.